
FashionGraph: understanding fashion data using scene graph generation

Shabnam Sadegharmaki¹, Marc A. Kastner², Shin'ichi Satoh²

¹Technical University of Munich, ²National Institute of Informatics, Tokyo

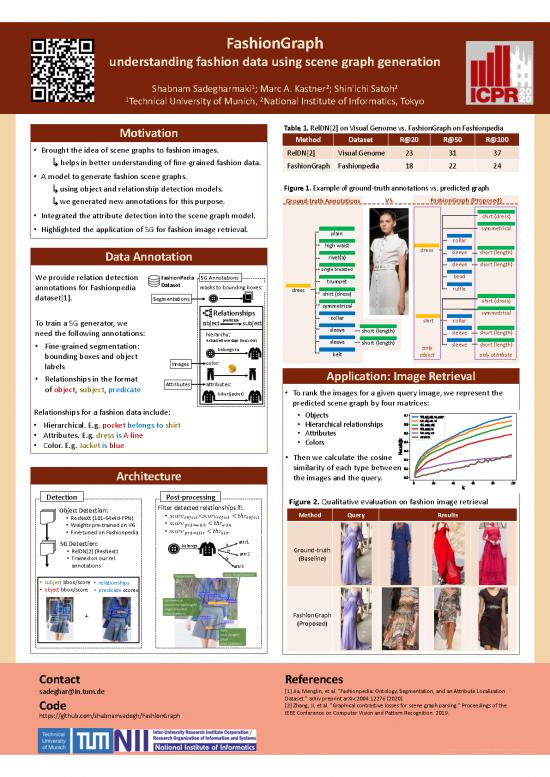

Motivation

• Brought the idea of scene graphs (SG) to fashion images.
  ↳ helps to better understand fine-grained fashion data.
• A model to generate fashion scene graphs.
  ↳ using object and relationship detection models.
  ↳ we generated new annotations for this purpose.
• Integrated attribute detection into the scene graph model.
• Highlighted the application of SG to fashion image retrieval.

Figure 1. Example of ground-truth annotations vs. predicted graph (panels: Ground-truth Annotations vs. FashionGraph (Proposed)).

Table 1. RelDN[2] on Visual Genome vs. FashionGraph on Fashionpedia

| Method | Dataset | R@20 | R@50 | R@100 |
|---|---|---|---|---|
| RelDN[2] | Visual Genome | 23 | 31 | 37 |
| FashionGraph | Fashionpedia | 18 | 22 | 24 |
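Table 1 reports R@K, the usual scene-graph recall at K: the fraction of ground-truth relationship triplets that appear among the top-K predicted triplets. The sketch below computes it for one image. It assumes the common matching rule: the subject, predicate, and object labels must all agree, and both boxes must reach IoU ≥ 0.5 with their ground truth. It omits refinements such as one-to-one matching and graph constraints. The names (`recall_at_k`, `is_match`) and the toy triplets are hypothetical, not taken from the FashionGraph code. Dataset-level numbers would be averaged over test images and reported as percentages.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)
# Triplet: (subject label, subject box, predicate, object label, object box)
Triplet = Tuple[str, Box, str, str, Box]


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    iw = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    ih = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = iw * ih
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def is_match(pred: Triplet, gt: Triplet, iou_thr: float = 0.5) -> bool:
    """All three labels agree and both boxes overlap their ground truth."""
    same_labels = pred[0] == gt[0] and pred[2] == gt[2] and pred[3] == gt[3]
    return same_labels and iou(pred[1], gt[1]) >= iou_thr and iou(pred[4], gt[4]) >= iou_thr


def recall_at_k(ranked: List[Triplet], gts: List[Triplet], k: int) -> float:
    """Fraction of ground-truth triplets recovered by the top-k predictions."""
    if not gts:
        return 0.0
    top_k = ranked[:k]  # predictions are assumed sorted by confidence
    hits = sum(any(is_match(p, g) for p in top_k) for g in gts)
    return hits / len(gts)


gt = [("pocket", (10, 10, 20, 20), "belongs to", "shirt", (0, 0, 100, 100))]
preds = [
    ("sleeve", (0, 0, 30, 50), "belongs to", "shirt", (0, 0, 100, 100)),
    ("pocket", (11, 10, 20, 20), "belongs to", "shirt", (0, 0, 100, 100)),
]
print(recall_at_k(preds, gt, 1), recall_at_k(preds, gt, 2))  # 0.0 1.0
```

The correct pocket triplet is ranked second, so it only counts once K reaches 2. This is why recall grows from R@20 to R@100.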

Data Annotation

We provide relation detection annotations for the Fashionpedia dataset[1].

Diagram: Fashionpedia → SG annotations. Segmentations: masks to bounding boxes. Relationships (object, predicate, subject): hierarchy, extracted from overlaps (IoU > 0.9) with the predicate "belongs to"; attributes; color (from images).

To train an SG generator, we need the following annotations:
• Fine-grained bounding boxes and object labels

• Relationships in the format of (object, subject, predicate)

Relationships for fashion data include:
• Hierarchical, e.g., pocket belongs to shirt
• Attributes, e.g., dress is A-line
• Color, e.g., jacket is blue

Application: Image Retrieval

• To rank the images for a given query image, we represent the predicted scene graph by four matrices:
  • Objects
  • Hierarchical relationships
  • Attributes
  • Colors

• Then we calculate the cosine similarity of each type between the images and the query.

Figure 2. Qualitative evaluation on fashion image retrieval (columns: Method, Query, Results; rows: Ground-truth (Baseline) and FashionGraph (Proposed)).

Architecture

Detection

Object Detection:
• ResNeXt (101-64x4d-FPN)
• Weights pre-trained on Visual Genome (VG)
• Fine-tuned on Fashionpedia

SG Detection:
• RelDN[2] (ResNeXt)
• Trained on our relationship annotations
• Outputs: subject bbox/score, object bbox/score, relationships, and predicate scores

Post-processing

Filter detected relationships if:
• score_subj × score_obj < th
• score_pred < th
• score_pred,attr < th_attr
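A minimal sketch of the post-processing filter, assuming three rules. A detected relationship is dropped if the product of its subject and object scores is below `th`, if its predicate score is below `th`, or if it is an attribute relationship whose predicate score is below `th_attr`. The `Relation` fields and the default threshold values are hypothetical placeholders, not the values used for FashionGraph.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Relation:
    """One detected relationship; field names are illustrative."""
    subject: str
    predicate: str
    obj: str
    subject_score: float
    object_score: float
    predicate_score: float
    is_attribute: bool = False  # True for "<garment> is <attribute>" relations


def filter_relations(rels: List[Relation], th: float = 0.3, th_attr: float = 0.5) -> List[Relation]:
    """Keep only relationships that pass every threshold (values are placeholders)."""
    kept = []
    for r in rels:
        if r.subject_score * r.object_score < th:
            continue  # subject/object boxes are not confident enough
        if r.predicate_score < th:
            continue  # predicate is not confident enough
        if r.is_attribute and r.predicate_score < th_attr:
            continue  # attribute predicates must also pass their own threshold
        kept.append(r)
    return kept


rels = [
    Relation("pocket", "belongs to", "shirt", 0.9, 0.8, 0.6),
    Relation("dress", "is", "a-line", 0.9, 0.9, 0.4, is_attribute=True),
    Relation("sleeve", "belongs to", "dress", 0.5, 0.5, 0.9),
]
print([(r.subject, r.predicate, r.obj) for r in filter_relations(rels)])
# [('pocket', 'belongs to', 'shirt')]
```

The attribute relation passes the generic threshold but fails `th_attr`. The sleeve relation has a confident predicate but weak boxes (0.5 × 0.5 = 0.25), so both are dropped.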
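For the Application: Image Retrieval step, a minimal sketch follows. A predicted scene graph is encoded as four count matrices (objects, hierarchical relationships, attributes, colors), and gallery images are ranked against a query by cosine similarity per type. The toy vocabularies are hypothetical, and so are the helpers `to_matrices` and `rank`. The poster does not say how the four per-type similarities are combined, so averaging them is an assumption made here.

```python
from typing import Dict, List, Sequence, Tuple

import numpy as np

# Hypothetical toy vocabularies; a real system would use the Fashionpedia label sets.
OBJECTS = ["shirt", "dress", "jacket", "pocket", "sleeve", "collar"]
ATTRS = ["a-line", "plain", "symmetrical", "short (length)"]
COLORS = ["blue", "black", "white", "red"]

Graph = Dict[str, np.ndarray]


def to_matrices(objects: Sequence[str], triples: Sequence[Tuple[str, str, str]]) -> Graph:
    """Encode a predicted scene graph as four count matrices."""
    n = len(OBJECTS)
    g = {
        "objects": np.zeros(n),
        "hierarchy": np.zeros((n, n)),
        "attributes": np.zeros((n, len(ATTRS))),
        "colors": np.zeros((n, len(COLORS))),
    }
    for name in objects:
        g["objects"][OBJECTS.index(name)] += 1
    for head, pred, tail in triples:
        h = OBJECTS.index(head)
        if pred == "belongs to":
            g["hierarchy"][h, OBJECTS.index(tail)] += 1
        elif tail in COLORS:
            g["colors"][h, COLORS.index(tail)] += 1
        elif tail in ATTRS:
            g["attributes"][h, ATTRS.index(tail)] += 1
    return g


def cosine(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity of two flattened matrices (0 if either is all zeros)."""
    a, b = a.ravel(), b.ravel()
    denom = np.linalg.norm(a) * np.linalg.norm(b)
    return float(a @ b / denom) if denom > 0 else 0.0


def rank(query: Graph, gallery: List[Graph]) -> List[int]:
    """Gallery indices sorted by the mean of the four per-type similarities."""
    scores = [np.mean([cosine(query[k], g[k]) for k in query]) for g in gallery]
    return sorted(range(len(gallery)), key=lambda i: scores[i], reverse=True)


query = to_matrices(["jacket", "pocket"], [("pocket", "belongs to", "jacket"), ("jacket", "is", "blue")])
gallery = [
    to_matrices(["dress"], [("dress", "is", "a-line")]),
    to_matrices(["jacket", "pocket"], [("pocket", "belongs to", "jacket"), ("jacket", "is", "black")]),
]
print(rank(query, gallery))  # [1, 0]
```

Keeping one similarity per type lets a match on garment structure (a pocket on a jacket) count even when the color differs. A weighted sum instead of the plain mean would be an equally plausible design.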
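The Data Annotation step (masks to bounding boxes, hierarchical "belongs to" relations from overlaps, attribute "is" relations) can be sketched as below. Several things here are assumptions:

- The Fashionpedia segmentations have already been decoded into binary numpy masks.
- `PART_LABELS` and the attribute names are hypothetical.
- Color relations are omitted, since the poster does not say how color is extracted.

The poster states the hierarchy criterion as IoU > 0.9. A literal IoU between a small part and its garment is tiny, though: a 10×10 pocket inside an 80×80 shirt gives 100/6400 ≈ 0.016. This sketch therefore measures overlap as the intersection divided by the part's own box area.

```python
from typing import Dict, List, Tuple

import numpy as np

Box = Tuple[int, int, int, int]  # (x1, y1, x2, y2), max corner exclusive

# Hypothetical label split: garment parts vs. whole garments.
PART_LABELS = {"pocket", "sleeve", "collar", "neckline", "ruffle", "bead"}


def mask_to_bbox(mask: np.ndarray) -> Box:
    """Tight bounding box around the True pixels of a binary H x W mask."""
    ys, xs = np.nonzero(mask)
    if ys.size == 0:
        raise ValueError("empty mask")
    return int(xs.min()), int(ys.min()), int(xs.max()) + 1, int(ys.max()) + 1


def overlap_over_part(part: Box, whole: Box) -> float:
    """Fraction of the part's box area that lies inside the whole garment's box."""
    iw = max(0, min(part[2], whole[2]) - max(part[0], whole[0]))
    ih = max(0, min(part[3], whole[3]) - max(part[1], whole[1]))
    area = (part[2] - part[0]) * (part[3] - part[1])
    return iw * ih / area if area > 0 else 0.0


def build_triples(objects: List[Dict], thr: float = 0.9) -> List[Tuple[str, str, str]]:
    """Derive (head, predicate, tail) triples, read as 'head predicate tail'.

    Each object dict is assumed to carry 'label', 'mask' and 'attributes'.
    """
    boxes = [mask_to_bbox(o["mask"]) for o in objects]
    triples = []
    for i, obj in enumerate(objects):
        for attr in obj.get("attributes", []):  # e.g. ("dress", "is", "a-line")
            triples.append((obj["label"], "is", attr))
        if obj["label"] not in PART_LABELS:
            continue
        for j, other in enumerate(objects):  # e.g. ("pocket", "belongs to", "shirt")
            if j == i or other["label"] in PART_LABELS:
                continue
            if overlap_over_part(boxes[i], boxes[j]) > thr:
                triples.append((obj["label"], "belongs to", other["label"]))
    return triples


shirt = np.zeros((100, 100), dtype=bool)
shirt[10:90, 10:90] = True
pocket = np.zeros((100, 100), dtype=bool)
pocket[20:30, 60:70] = True
objs = [
    {"label": "shirt", "mask": shirt, "attributes": ["plain"]},
    {"label": "pocket", "mask": pocket, "attributes": []},
]
print(build_triples(objs))
# [('shirt', 'is', 'plain'), ('pocket', 'belongs to', 'shirt')]
```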

Contact

sadeghar@in.tum.de

Code

https://github.com/shabnamsadegh/FashionGraph

References

[1] Jia, Menglin, et al. "Fashionpedia: Ontology, Segmentation, and an Attribute Localization Dataset." arXiv preprint arXiv:2004.12276 (2020).

[2] Zhang, Ji, et al. "Graphical contrastive losses for scene graph parsing." Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (2019).
