Source: www.csee.umbc.edu

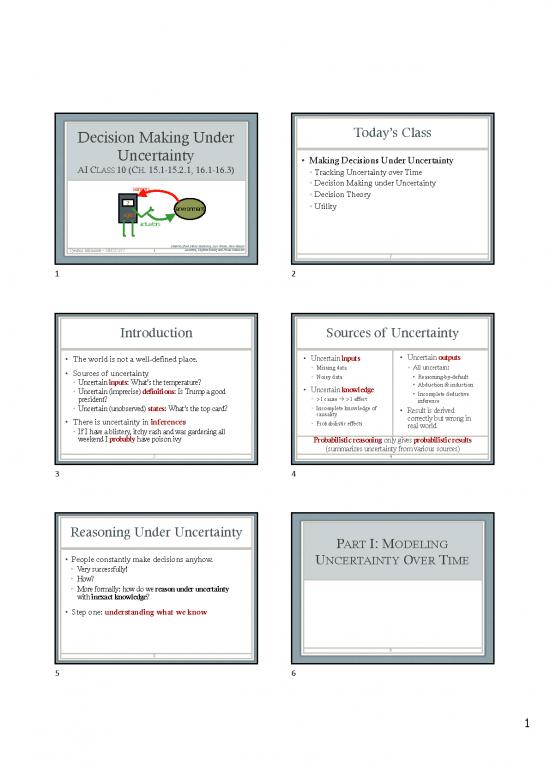

Decision Making Under Uncertainty
AI Class 10 (Ch. 15.1-15.2.1, 16.1-16.3)

[Figure: an agent interacting with its environment through sensors and actuators]

Cynthia Matuszek – CMSC 671
Material from Marie desJardin, Lise Getoor, Jean-Claude Latombe, Daphne Koller, and Paula Matuszek

Today's Class
• Making Decisions Under Uncertainty
• Tracking Uncertainty over Time
• Decision Making under Uncertainty
• Decision Theory
• Utility

Introduction
• The world is not a well-defined place.
• Sources of uncertainty
  • Uncertain inputs: What's the temperature?
  • Uncertain (imprecise) definitions: Is Trump a good president?
  • Uncertain (unobserved) states: What's the top card?
• There is uncertainty in inferences
  • If I have a blistery, itchy rash and was gardening all weekend, I probably have poison ivy

Sources of Uncertainty
• Uncertain inputs
  • Missing data
  • Noisy data
• Uncertain knowledge
  • >1 cause → >1 effect
  • Incomplete knowledge of causality
  • Probabilistic effects
• Uncertain outputs
  • All uncertain:
    • Reasoning-by-default
    • Abduction & induction
    • Incomplete deductive inference
      • Result is derived correctly but wrong in real world

Probabilistic reasoning only gives probabilistic results (summarizes uncertainty from various sources)

Reasoning Under Uncertainty
• People constantly make decisions anyhow.
  • Very successfully!
  • How?
• More formally: how do we reason under uncertainty with inexact knowledge?
• Step one: understanding what we know

PART I: MODELING UNCERTAINTY OVER TIME

States and Observations
• Agents don't have a continuous view of world
  • People don't either!
• We see things as a series of snapshots:
  • Observations, associated with time slices
  • $t_1, t_2, t_3, \ldots$
• Each snapshot contains all variables, observed or not
  • $X_t$ = (unobserved) state variables at time $t$; observation at $t$ is $E_t$
• This is world state at time $t$

Temporal Probabilistic Agent
[Figure: an agent connected to its environment by sensors and actuators, across time steps $t_1, t_2, t_3, \ldots$]

Uncertainty and Time
• The world changes
  • Examples: diabetes management, traffic monitoring
  • Tasks: track changes; predict changes
• Basic idea:
  • For each time step, copy state and evidence variables
  • Model uncertainty in change over time (the Δ)
  • Incorporate new observations as they arrive

Uncertainty and Time
• Basic idea:
  • Copy state and evidence variables for each time step
  • Model uncertainty in change over time
  • Incorporate new observations as they arrive
• $X_t$ = unobserved/unobservable state variables at time $t$:
  $\text{BloodSugar}_t$, $\text{StomachContents}_t$
• $E_t$ = evidence variables at time $t$:
  $\text{MeasuredBloodSugar}_t$, $\text{PulseRate}_t$, $\text{FoodEaten}_t$
• Assuming discrete time steps

States (more formally)
• Change is viewed as series of snapshots
  • Time slices/timesteps
  • Each describing the state of the world at a particular time
  • So we also refer to these as states
• Each time slice/timestep/state is represented as a set of random variables indexed by $t$:
  1. the set of unobservable state variables $X_t$
  2. the set of observable evidence variables $E_t$

Observations (more formally)
• Time slice (a set of random variables indexed by $t$):
  1. the set of unobservable state variables $X_t$
  2. the set of observable evidence variables $E_t$
• An observation is a set of observed variable instantiations at some timestep
• Observation at time $t$: $E_t = e_t$
  • (for some values $e_t$)
• $X_{a:b}$ denotes the set of variables from $X_a$ to $X_b$

Transition and Sensor Models
• So how do we model change over time?
• Transition model
  • Models how the world changes over time
  • Specifies a probability distribution…
    • Over state variables at time $t$
    • Given values at previous times
  • $P(X_t \mid X_{0:t-1})$ (this can get exponentially large…)
• Sensor model
  • Models how evidence (sensor data) gets its values
  • E.g.: $\text{BloodSugar}_t \to \text{MeasuredBloodSugar}_t$

Markov Assumption(s)
• Markov Assumption:
  • $X_t$ depends on some finite (usually fixed) number of previous $X_i$'s
• First-order Markov process: $P(X_t \mid X_{0:t-1}) = P(X_t \mid X_{t-1})$
• $k$th order: depends on previous $k$ time steps
• Sensor Markov assumption: $P(E_t \mid X_{0:t}, E_{0:t-1}) = P(E_t \mid X_t)$
  • Agent's observations depend only on actual current state of the world
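To make the "exponentially large" remark concrete, here is a minimal sketch that counts how many conditioning contexts (table rows) each form of the transition model needs. The function names and the choice of a two-valued state variable are illustrative assumptions, not part of the slides.

```python
def full_history_contexts(num_values: int, t: int) -> int:
    """Rows needed for P(X_t | X_{0:t-1}): one per joint assignment of X_0 .. X_{t-1}."""
    return num_values ** t


def kth_order_contexts(num_values: int, k: int = 1) -> int:
    """Rows needed under a k-th order Markov assumption: one per assignment of the last k states."""
    return num_values ** k


# Compare table sizes for a binary state variable as the history grows.
for t in (1, 5, 10, 20):
    print(t, full_history_contexts(2, t), kth_order_contexts(2, 1))
```

Under the first-order assumption the table size stays fixed no matter how long the history is, which is what makes the model practical to specify.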

Stationary Process
• Infinitely many possible values of $t$
  • Does each timestep need a distribution?
  • That is, do we need a distribution of what the world looks like at $t_3$, given $t_2$, AND a distribution for $t_{16}$ given $t_{15}$ AND …
• Assume stationary process:
  • Changes in the world state are governed by laws that do not themselves change over time
  • Transition model $P(X_t \mid X_{t-1})$ and sensor model $P(E_t \mid X_t)$ are time-invariant, i.e., they are the same for all $t$

Complete Joint Distribution
• Given:
  • Transition model: $P(X_t \mid X_{t-1})$
  • Sensor model: $P(E_t \mid X_t)$
  • Prior probability: $P(X_0)$
• Then we can specify a complete joint distribution of a sequence of states:

$$P(X_0, X_1, \ldots, X_t, E_1, \ldots, E_t) = P(X_0) \prod_{i=1}^{t} P(X_i \mid X_{i-1})\, P(E_i \mid X_i)$$

• What's the joint probability of instantiations?
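As a small worked instance of the joint distribution, assuming the first-order Markov and sensor Markov assumptions from the earlier slides, the product for two time steps ($t = 2$) expands to:

$$P(X_0, X_1, X_2, E_1, E_2) = P(X_0)\; P(X_1 \mid X_0)\, P(E_1 \mid X_1)\; P(X_2 \mid X_1)\, P(E_2 \mid X_2)$$

Each factor is a single lookup in the prior, the transition model, or the sensor model, so the joint probability of any full instantiation is a product of $2t + 1$ table entries.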

Example

| $R_{t-1}$ | $P(R_t \mid R_{t-1})$ |
|---|---|
| t | 0.7 |
| f | 0.3 |

Weather has a 30% chance of changing and a 70% chance of staying the same.

[Figure: $\text{Rain}_{t-1} \to \text{Rain}_t \to \text{Rain}_{t+1}$, with each $\text{Rain}$ node pointing to the $\text{Umbrella}$ node of the same time step]

| $R_t$ | $P(U_t \mid R_t)$ |
|---|---|
| t | 0.9 |
| f | 0.2 |

Fully worked out HMM for rain: www2.isye.gatech.edu/~yxie77/isye6416_17/Lecture6.pdf

Inference Tasks
• Filtering or monitoring: $P(X_t \mid e_1, \ldots, e_t)$
  • Compute the current belief state, given all evidence to date
• Prediction: $P(X_{t+k} \mid e_1, \ldots, e_t)$
  • Compute the probability of a future state
• Smoothing: $P(X_k \mid e_1, \ldots, e_t)$
  • Compute the probability of a past state (hindsight)
• Most likely explanation: $\arg\max_{x_1, \ldots, x_t} P(x_1, \ldots, x_t \mid e_1, \ldots, e_t)$
  • Given a sequence of observations, find the sequence of states that is most likely to have generated those observations
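The umbrella tables are enough to answer the earlier question about the joint probability of an instantiation. The sketch below multiplies the prior, transition, and sensor entries for one concrete sequence. The uniform prior on Day 0 is an assumption borrowed from the group exercise later in the deck, and all names (`joint_probability`, `prob`, the constants) are illustrative.

```python
# Tables from the umbrella example. True means "rain" / "umbrella seen".
P_RAIN_0 = 0.5                                   # assumed uniform prior P(R_0 = true)
P_RAIN_GIVEN_PREV = {True: 0.7, False: 0.3}      # P(R_t = true | R_{t-1})
P_UMBRELLA_GIVEN_RAIN = {True: 0.9, False: 0.2}  # P(U_t = true | R_t)


def prob(p_true: float, value: bool) -> float:
    """Probability of a Boolean value, given the probability that it is true."""
    return p_true if value else 1.0 - p_true


def joint_probability(rains, umbrellas) -> float:
    """P(r_0, ..., r_t, u_1, ..., u_t) as prior * product of transition * sensor terms."""
    if len(rains) != len(umbrellas) + 1:
        raise ValueError("need states r_0..r_t and observations u_1..u_t")
    p = prob(P_RAIN_0, rains[0])
    for i, umbrella in enumerate(umbrellas, start=1):
        p *= prob(P_RAIN_GIVEN_PREV[rains[i - 1]], rains[i])   # transition model
        p *= prob(P_UMBRELLA_GIVEN_RAIN[rains[i]], umbrella)   # sensor model
    return p


# Rain on days 0, 1, 2 and an umbrella seen on days 1 and 2.
print(joint_probability([True, True, True], [True, True]))
```

For this sequence the product is 0.5 × (0.7 × 0.9) × (0.7 × 0.9), and summing the function over every possible instantiation gives 1, as a joint distribution should.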

Examples
• Filtering: What is the probability that it is raining today, given all of the umbrella observations up through today?
• Prediction: What is the probability that it will rain the day after tomorrow, given all of the umbrella observations up through today?
• Smoothing: What is the probability that it rained yesterday, given all of the umbrella observations through today?
• Most likely explanation: If the umbrella appeared the first three days but not on the fourth, what is the most likely weather sequence to produce these umbrella sightings?

Filtering
• Maintain a current state estimate and update it
  • Instead of looking at all observed values in history
  • Also called state estimation
• Given result of filtering up to time $t$, agent must compute result at $t+1$ from new evidence $e_{t+1}$:

$$P(X_{t+1} \mid e_{1:t+1}) = f(e_{t+1}, P(X_t \mid e_{1:t}))$$

… for some function $f$.
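The most likely explanation example (umbrella on the first three days, none on the fourth) can be checked directly from its definition. The sketch below is a brute-force enumeration over all weather sequences, not an algorithm from the slides; the standard efficient method is the Viterbi algorithm. The uniform Day 0 prior and all function names are assumptions made for illustration.

```python
from itertools import product

# Umbrella-world tables. True means "rain" / "umbrella seen".
P_RAIN_0 = 0.5                                   # assumed uniform prior P(R_0 = true)
P_RAIN_GIVEN_PREV = {True: 0.7, False: 0.3}      # P(R_t = true | R_{t-1})
P_UMBRELLA_GIVEN_RAIN = {True: 0.9, False: 0.2}  # P(U_t = true | R_t)


def prob(p_true, value):
    """Probability of a Boolean value, given the probability that it is true."""
    return p_true if value else 1.0 - p_true


def sequence_score(rains, umbrellas):
    """P(r_1..r_t, u_1..u_t), with the unobserved Day 0 state summed out."""
    # P(r_1) = sum over r_0 of P(r_0) * P(r_1 | r_0)
    p = sum(
        prob(P_RAIN_0, r0) * prob(P_RAIN_GIVEN_PREV[r0], rains[0])
        for r0 in (True, False)
    )
    p *= prob(P_UMBRELLA_GIVEN_RAIN[rains[0]], umbrellas[0])
    for prev, cur, umbrella in zip(rains, rains[1:], umbrellas[1:]):
        p *= prob(P_RAIN_GIVEN_PREV[prev], cur)            # transition model
        p *= prob(P_UMBRELLA_GIVEN_RAIN[cur], umbrella)    # sensor model
    return p


def most_likely_explanation(umbrellas):
    """Try every rain sequence and keep the one with the highest joint probability."""
    candidates = product((True, False), repeat=len(umbrellas))
    return max(candidates, key=lambda rains: sequence_score(rains, umbrellas))


best = most_likely_explanation((True, True, True, False))
print(best)  # (True, True, True, False): rain on days 1-3, no rain on day 4
```

Maximizing the joint $P(x_{1:t}, e_{1:t})$ gives the same sequence as maximizing $P(x_{1:t} \mid e_{1:t})$, because the two differ only by the constant factor $1 / P(e_{1:t})$. Enumeration costs $2^t$ evaluations, so it is only practical for short sequences like this one.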

Recursive Estimation
1. Project current state forward ($t \to t+1$)
2. Update state using new evidence $e_{t+1}$

$P(X_{t+1} \mid e_{1:t+1})$ as a function of $e_{t+1}$ and $P(X_t \mid e_{1:t})$:

$$P(X_{t+1} \mid e_{1:t+1}) = P(X_{t+1} \mid e_{1:t}, e_{t+1})$$

Recursive Estimation
• $P(X_{t+1} \mid e_{1:t+1})$ as a function of $e_{t+1}$ and $P(X_t \mid e_{1:t})$:

$$\begin{aligned}
P(X_{t+1} \mid e_{1:t+1}) &= P(X_{t+1} \mid e_{1:t}, e_{t+1}) && \text{(dividing up evidence)} \\
&= \alpha\, P(e_{t+1} \mid X_{t+1}, e_{1:t})\, P(X_{t+1} \mid e_{1:t}) && \text{(Bayes' rule)} \\
&= \alpha\, P(e_{t+1} \mid X_{t+1})\, P(X_{t+1} \mid e_{1:t}) && \text{(sensor Markov assumption)}
\end{aligned}$$

• $P(e_{t+1} \mid X_{t+1})$ updates with new evidence (from sensor)
• One-step prediction by conditioning on current state $X_t$:

$$P(X_{t+1} \mid e_{1:t+1}) = \alpha\, P(e_{t+1} \mid X_{t+1}) \sum_{x_t} P(X_{t+1} \mid x_t)\, P(x_t \mid e_{1:t})$$
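The two steps (project forward, then update) can be sketched for the umbrella world as a single function. This is a minimal illustration under the assumption of one Boolean state variable and one Boolean evidence variable; the name `forward` and the dictionary layout are choices made here, not taken from the slides.

```python
# Umbrella-world tables. True means "rain" / "umbrella seen".
P_RAIN_GIVEN_PREV = {True: 0.7, False: 0.3}      # P(R_{t+1} = true | R_t)
P_UMBRELLA_GIVEN_RAIN = {True: 0.9, False: 0.2}  # P(U_t = true | R_t)


def forward(belief_rain: float, umbrella: bool) -> float:
    """One filtering step.

    Takes P(R_t = true | e_{1:t}) and the new observation e_{t+1},
    and returns P(R_{t+1} = true | e_{1:t+1}).
    """
    # 1. Project forward: sum over x_t of P(X_{t+1} | x_t) * P(x_t | e_{1:t})
    predicted_rain = (
        P_RAIN_GIVEN_PREV[True] * belief_rain
        + P_RAIN_GIVEN_PREV[False] * (1.0 - belief_rain)
    )

    # 2. Update: weight each predicted state by the sensor model P(e_{t+1} | X_{t+1})
    def likelihood(rain: bool) -> float:
        p_umbrella = P_UMBRELLA_GIVEN_RAIN[rain]
        return p_umbrella if umbrella else 1.0 - p_umbrella

    weight_rain = likelihood(True) * predicted_rain
    weight_dry = likelihood(False) * (1.0 - predicted_rain)

    # Dividing by the total plays the role of the normalizing constant alpha.
    return weight_rain / (weight_rain + weight_dry)


# Starting from a uniform belief and seeing an umbrella on day 1:
day1 = forward(0.5, True)
print(round(day1, 3))  # 0.818
```

Because the function needs only the previous belief and the newest observation, the cost per time step is constant regardless of how much evidence has accumulated.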

Recursive Estimation
• One-step prediction by conditioning on current state $X_t$:

$$P(X_{t+1} \mid e_{1:t+1}) = \alpha\, P(e_{t+1} \mid X_{t+1}) \sum_{x_t} \underbrace{P(X_{t+1} \mid x_t)}_{\text{transition model}}\; \underbrace{P(x_t \mid e_{1:t})}_{\text{current state}}$$

• …which is what we wanted!
• So, think of $P(X_t \mid e_{1:t})$ as a "message" $f_{1:t}$
  • Carried forward along the time steps
  • Modified at every transition, updated at every new observation
• This leads to a recursive definition:

$$f_{1:t+1} = \alpha\, \text{FORWARD}(f_{1:t}, e_{t+1})$$

Group Exercise: Filtering

$$P(X_{t+1} \mid e_{1:t+1}) = \alpha\, P(e_{t+1} \mid X_{t+1}) \sum_{x_t} P(X_{t+1} \mid x_t)\, P(x_t \mid e_{1:t})$$

| $R_{t-1}$ | $P(R_t \mid R_{t-1})$ |
|---|---|
| T | 0.7 |
| F | 0.3 |

[Figure: $\text{Rain}_{t-1} \to \text{Rain}_t \to \text{Rain}_{t+1}$, with each $\text{Rain}$ node pointing to the $\text{Umbrella}$ node of the same time step]

| $R_t$ | $P(U_t \mid R_t)$ |
|---|---|
| T | 0.9 |
| F | 0.2 |

What is the probability of rain on Day 2, given a uniform prior of rain on Day 0, $U_1 = \text{true}$, and $U_2 = \text{true}$?

Instructor's note: We got here, but I don't know that they really understood it. Spent time on the class exercise and told them to do it outside. Definitely one for HW3/final exam. Didn't even start decision making.
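One way to work the exercise is to apply the filtering equation twice, writing each distribution as $\langle P(\text{rain}), P(\neg\text{rain}) \rangle$. The numbers below follow from the two tables and the uniform prior; the intermediate values are rounded to three places.

$$\begin{aligned}
\text{Day 1, predict:}\quad & P(R_1) = \langle 0.7 \cdot 0.5 + 0.3 \cdot 0.5,\; 0.3 \cdot 0.5 + 0.7 \cdot 0.5 \rangle = \langle 0.5,\, 0.5 \rangle \\
\text{Day 1, update:}\quad & P(R_1 \mid u_1) = \alpha \langle 0.9 \cdot 0.5,\; 0.2 \cdot 0.5 \rangle = \alpha \langle 0.45,\, 0.1 \rangle \approx \langle 0.818,\, 0.182 \rangle \\
\text{Day 2, predict:}\quad & P(R_2 \mid u_1) \approx \langle 0.7 \cdot 0.818 + 0.3 \cdot 0.182,\; 0.3 \cdot 0.818 + 0.7 \cdot 0.182 \rangle \approx \langle 0.627,\, 0.373 \rangle \\
\text{Day 2, update:}\quad & P(R_2 \mid u_1, u_2) \approx \alpha \langle 0.9 \cdot 0.627,\; 0.2 \cdot 0.373 \rangle \approx \alpha \langle 0.565,\, 0.075 \rangle \approx \langle 0.883,\, 0.117 \rangle
\end{aligned}$$

So the probability of rain on Day 2 is approximately 0.883. The belief rises from Day 1 to Day 2 because rain tends to persist and a second umbrella sighting adds further evidence.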
